The original version of this design doc was created at Render in November 2022. This abridged version is provided as an in-depth supplement to this blog post.

Our free-tier infrastructure creates a lot of overhead for the K8s networking machinery.

This doc explores reducing the number of K8s Services tied to each Revision to zero. The implication is significant: each free-tier service a user creates would then contribute to networking overhead only through Pod churn, which is small compared to what it contributes through Services and Endpoints updates.

- This lowers Calico and kube-proxy CPU usage, which keeps our systems stable.

- We've also observed a possible "max number of services a cluster can support". Removing free-tier from that equation could let each cluster support more paid-tier customers without impacting free-tier users.

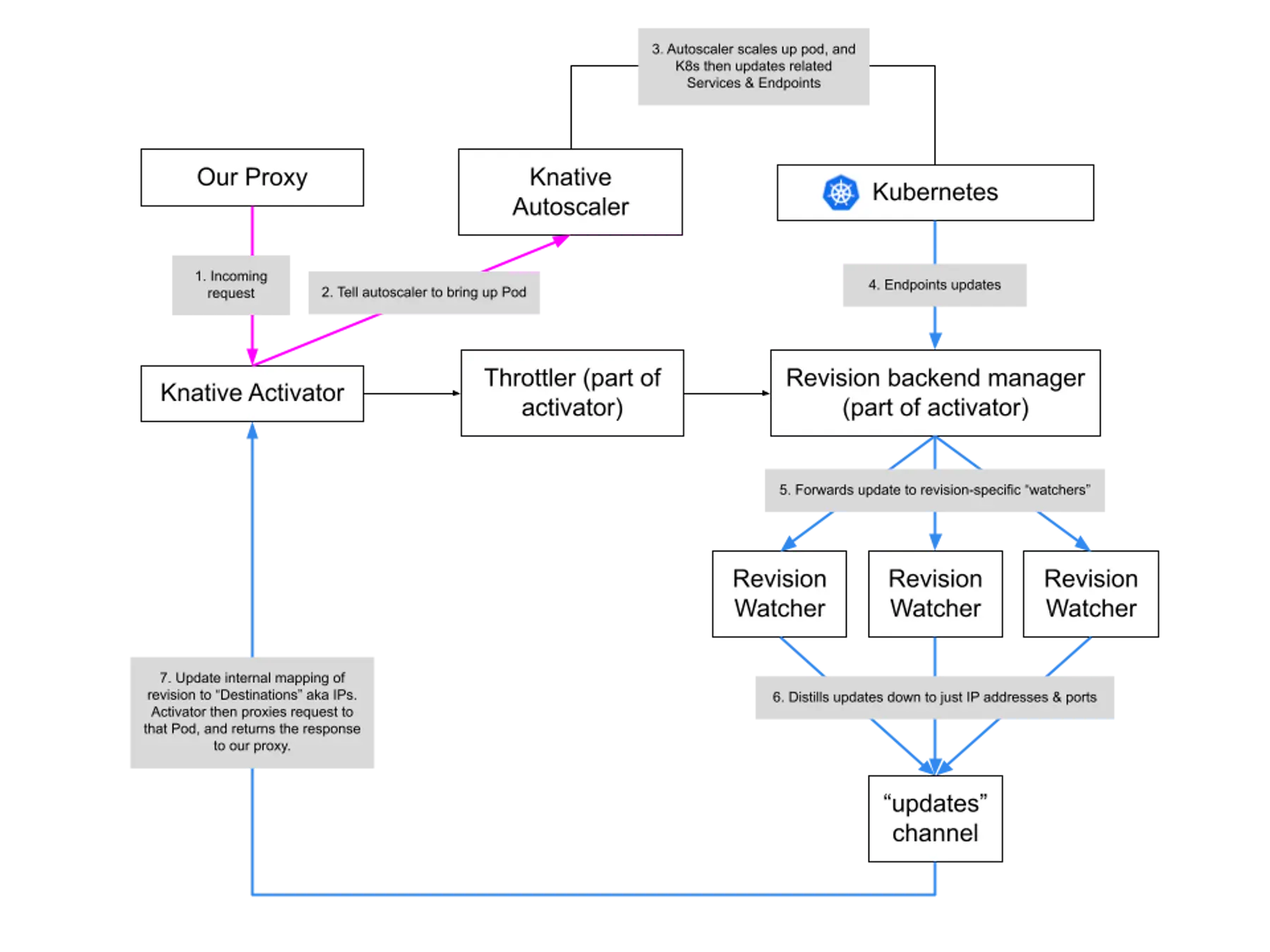

Before outlining the solution, let's discuss how the Knative Activator works.

How the Knative Activator Works

This section is very technical because I want to preserve context from my adventure into the Knative source code, for future me and future you.

When the Activator Receives a Request

Simplified code:

a.throttler holds an in-memory map of revisionID → revisionThrottler. It's responsible for throttling requests to a particular revision, doing in-memory load-balancing across revisions, etc.

Most importantly, each revisionThrottler keeps track of podTrackers and assignedTrackers; for the purposes of our free-tier infra, those are the same. In other words, they keep track of the pods backing a revision. Each tracker points to a dest, which is what gets "passed back" by Try. A dest is simply the destination to proxy the request to, and looks like http://1.2.3.4:8012.

So the code here is simply: "Is there a pod backing the revision? If so, pass it back to me so I can proxy the request".
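To make the lookup concrete, here is a minimal sketch of that flow. It is not the Knative source: the type and field names mirror the ones mentioned above, but the signatures, the errNoBackingPod error, and the pickDest helper are assumptions for illustration. The real Try also handles capacity and waiting, which this sketch leaves out.

```go
package main

import (
	"errors"
	"sync"
)

// errNoBackingPod is a hypothetical error; the real activator would wait
// for capacity rather than fail immediately.
var errNoBackingPod = errors.New("no pod is backing this revision")

// podTracker points at one pod. dest looks like "http://1.2.3.4:8012".
type podTracker struct{ dest string }

// revisionThrottler tracks the pods backing a single revision.
type revisionThrottler struct {
	mu               sync.RWMutex
	assignedTrackers []*podTracker
}

// pickDest returns the dest of the first tracked pod, if there is one.
// For free-tier there is at most one pod, so no load-balancing is needed.
func (rt *revisionThrottler) pickDest() (string, bool) {
	rt.mu.RLock()
	defer rt.mu.RUnlock()
	if len(rt.assignedTrackers) == 0 {
		return "", false
	}
	return rt.assignedTrackers[0].dest, true
}

// Throttler holds the in-memory map of revisionID -> revisionThrottler.
type Throttler struct {
	mu        sync.RWMutex
	revisions map[string]*revisionThrottler
}

// Try asks: "is there a pod backing the revision?" If so, it passes the
// pod's dest to proxy so the caller can forward the request there.
func (t *Throttler) Try(revID string, proxy func(dest string) error) error {
	t.mu.RLock()
	rt, ok := t.revisions[revID]
	t.mu.RUnlock()
	if !ok {
		return errNoBackingPod
	}
	dest, ok := rt.pickDest()
	if !ok {
		return errNoBackingPod
	}
	return proxy(dest)
}
```

A handler would call Try with the revision ID from the incoming request and proxy to whatever dest it is handed.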

How Each revisionThrottler Maintains podTrackers

Each activator runs one Throttler, which is a.throttler above. How a Throttler works:

- The Throttler creates a "Revision Backends Manager", which is an informer listening for Endpoints changes. On each update, it figures out which Revision the update is related to, and forwards that update to a channel specific to that Revision, rw.destsCh. There is one of these channels for each Revision.

- The revisionWatcher listening to that channel then distills that Endpoints update to one that involves only clusterIP and destination changes, aka the stuff that backs a Revision. It forwards this smaller update to a global channel, rw.updateCh. This channel is global to the Throttler.

- After it's created, the Throttler is Run, where it listens to updates on that updateCh channel. When it receives an update, it finds the relevant revisionThrottler and updates its internal state. This data is then available and used when serving the request (previous section).
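The three steps above can be sketched as a small channel pipeline. This is a simplified model, not the Knative implementation: endpointsEvent, revisionDestsUpdate, backendsManager, and the lower-case throttler type are hypothetical stand-ins, and the real code is driven by an informer rather than direct calls to dispatch.

```go
package main

import "sort"

// endpointsEvent is a hypothetical stand-in for a raw Endpoints update.
type endpointsEvent struct {
	revID     string
	clusterIP string
	ready     []string // ready pod addresses, in arbitrary order
}

// revisionDestsUpdate is the distilled update: only clusterIP and dests.
type revisionDestsUpdate struct {
	revID     string
	clusterIP string
	dests     []string
}

// revisionWatcher owns the per-Revision channel (destsCh) and forwards
// distilled updates to the channel shared with the throttler (updateCh).
type revisionWatcher struct {
	destsCh  chan endpointsEvent
	updateCh chan<- revisionDestsUpdate
}

func (rw *revisionWatcher) run() {
	for ev := range rw.destsCh {
		dests := append([]string(nil), ev.ready...)
		sort.Strings(dests) // stable order so updates are comparable
		rw.updateCh <- revisionDestsUpdate{revID: ev.revID, clusterIP: ev.clusterIP, dests: dests}
	}
}

// backendsManager routes each event to the watcher for its Revision.
type backendsManager struct {
	watchers map[string]*revisionWatcher
}

func (m *backendsManager) dispatch(ev endpointsEvent) {
	if rw, ok := m.watchers[ev.revID]; ok {
		rw.destsCh <- ev
	}
}

// throttler keeps the latest dests per Revision for the request path.
type throttler struct {
	dests map[string][]string
}

// run consumes the global update channel until it is closed.
func (t *throttler) run(updateCh <-chan revisionDestsUpdate) {
	for u := range updateCh {
		t.dests[u.revID] = u.dests
	}
}
```

In this model each revisionWatcher and the throttler run in their own goroutines, and events for Revisions without a watcher are dropped.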

Removing the -private K8s Service

Because the Throttler only handles Endpoints updates, we must create a K8s Service for each Pod (this is the Service whose name has the suffix -private). If we delete this Service, then the underlying Endpoints is deleted, the activator does not know when a Revision's backing Pod has come up, and it fails to proxy the request correctly.

However, we can replace this flow with one that:

- Watches Pods instead of Endpoints

- When a Pod that is meant to back a Revision becomes Ready, forward the Pod's IP in place of Endpoints.Addresses. If the Pod is still not ready, forward its IP in place of Endpoints.NotReadyAddresses. The activator wants to keep track of both sets.

We can do this because we know there is at most one pod backing a Revision.
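As a rough illustration of the Pod-based flow, the sketch below maps a single Pod onto the ready and not-ready sets the activator expects. The pod and destsUpdate types and the destsFromPod function are hypothetical, and port 8012 is an assumption taken from the example dest earlier in this doc. A real implementation would read readiness from the Pod's status conditions.

```go
package main

import "net"

// servingPort is an assumption based on the example dest shown earlier.
const servingPort = "8012"

// pod is a hypothetical, trimmed-down view of a Kubernetes Pod.
type pod struct {
	revID string // the Revision this Pod backs
	ip    string // empty until the Pod has been assigned an IP
	ready bool   // whether the Pod is Ready
}

// destsUpdate mirrors the two address sets an Endpoints object carries.
type destsUpdate struct {
	revID         string
	readyDests    []string // stands in for Endpoints.Addresses
	notReadyDests []string // stands in for Endpoints.NotReadyAddresses
}

// destsFromPod converts a Pod into an update for its Revision. Because at
// most one Pod backs a Revision, each set has at most one entry. The bool
// is false when the Pod has no IP yet and there is nothing to forward.
func destsFromPod(p pod) (destsUpdate, bool) {
	if p.ip == "" {
		return destsUpdate{}, false
	}
	dest := net.JoinHostPort(p.ip, servingPort)
	u := destsUpdate{revID: p.revID}
	if p.ready {
		u.readyDests = []string{dest}
	} else {
		u.notReadyDests = []string{dest}
	}
	return u, true
}
```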

config-network Changes

We need to update the config-network configmap to:

We disable enableMeshPodAddressability because, when True, it indicates that the code should always proxy to the K8s Service.

The config change does not affect scale-to-zero behavior.

Rollout plan

To roll this out safely and gradually, we will run the Pod-based flow in parallel with the Endpoint-based one. The Pod-based flow will only run on Pods labeled with the key render.com/knative-serviceless; these same pods will be omitted from the Endpoint-based flow by patching the Knative controller to not create -private K8s Services for Revisions labeled with this key.
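The split between the two flows comes down to a label check. A minimal sketch, assuming the check is on the presence of the key only (the value is ignored) and using hypothetical function names:

```go
package main

// servicelessLabelKey is the label key named in the rollout plan.
const servicelessLabelKey = "render.com/knative-serviceless"

// usesPodFlow reports whether a Pod with these labels should be handled
// by the Pod-based flow. Only the presence of the key matters here.
func usesPodFlow(podLabels map[string]string) bool {
	_, ok := podLabels[servicelessLabelKey]
	return ok
}

// shouldCreatePrivateService reports whether the patched controller should
// still create the -private K8s Service for a Revision with these labels.
func shouldCreatePrivateService(revisionLabels map[string]string) bool {
	_, serviceless := revisionLabels[servicelessLabelKey]
	return !serviceless
}
```

Keeping the two predicates as exact opposites means every Revision is covered by exactly one flow.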

We can control which services will get this label, and we will have an environment variable that controls whether we apply this label at all. Then, we can slowly roll this out in production as follows:

- First, to Render-owned free-tier services. We will do a little async dogfooding.

- Then, to new free-tier services in a cluster.

- Then, to all free-tier services in that cluster, by labeling their KService resources.

- Repeat for each cluster.

If things go awry at any stage, we can roll back by:

- Turning "off" the environment variable and restarting the kubewatcher for that cluster.

- Manually removing the label from affected KService resources.
